Cloud Computing and the Growth of Systems Integration [Continued]

Thanks for returning for part two! As I hope you recall, part one of this article focused on cloud computing. Now we shift our focus to systems integration and its profound influence on how technology is delivered today.

Part two: systems integration

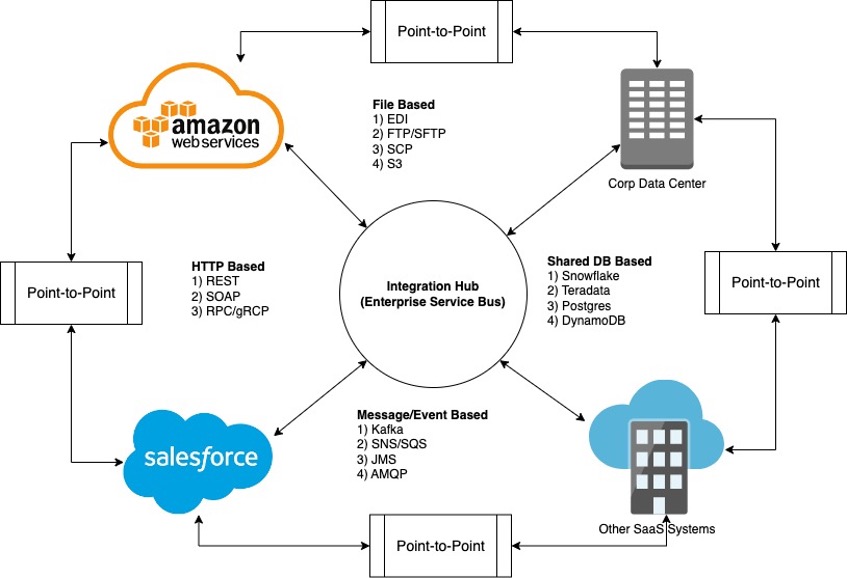

The key here is the sharing of data or functionality among systems to reduce duplication of information or behavior. This sharing typically takes place over a network and is generally delivered through a handful of means like remote procedure calls over HTTP, messaging, file transfers or a shared database. Integration is usually characterized either by direct point-to-point interactions or by an intermediary, such as a message broker or an enterprise service bus, that decouples the interacting systems.

Shared database integration

Integration through a shared database is a popular, long-standing pattern featuring a single source of data made available to multiple applications to support various business capabilities. Having a single database as the data source reduces the need to duplicate essential datasets like customers and products across different domains of the business, such as finance, procurement, marketing and order processing. Thanks to popular libraries and frameworks, data access programming against traditional databases is relatively simple, so development teams can quickly churn out functionality for market testing or to meet tight delivery deadlines. However, this also creates tight coupling to the structure, format and available access patterns constrained by the database’s data model design.

This coupling leads to complex deployment management across applications and difficult-to-debug issues that cascade across systems. It also introduces the risk of an innocent coding mistake overloading the shared database and degrading seemingly unrelated systems. For these reasons, the shared database pattern has fallen out of favor in most enterprise architectures that are operational in nature. However, shared data warehouses and data marts still offer a lot of value for reporting and analytics.
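To make the coupling concrete, here is a minimal sketch using Python's built-in sqlite3 module. The table, the column names and the three "teams" are all hypothetical, and an in-memory database stands in for the shared server. It shows how a change made by one team can break another team's query without touching that team's code.

```python
import sqlite3


def create_shared_db() -> sqlite3.Connection:
    """Create the (hypothetical) shared database with one customers table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)"
    )
    conn.execute(
        "INSERT INTO customers (name, email) VALUES ('Ada', 'ada@example.com')"
    )
    conn.commit()
    return conn


def marketing_emails(conn: sqlite3.Connection) -> list:
    """Marketing app: depends on the table and column names directly."""
    return [row[0] for row in conn.execute("SELECT email FROM customers")]


def finance_customer_names(conn: sqlite3.Connection) -> list:
    """Finance app: reads the same table for a different purpose."""
    return [row[0] for row in conn.execute("SELECT name FROM customers ORDER BY id")]


def order_team_migration(conn: sqlite3.Connection) -> None:
    """Order-processing team renames the table to suit its own model."""
    conn.execute("ALTER TABLE customers RENAME TO clients")
    conn.commit()


if __name__ == "__main__":
    conn = create_shared_db()
    print(marketing_emails(conn))
    order_team_migration(conn)
    try:
        marketing_emails(conn)
    except sqlite3.OperationalError as error:
        # The marketing app fails although its own code never changed.
        print("marketing app broke:", error)
```

Both reading applications work until the migration runs, after which every query that names the old table raises an error. In a real environment the same effect shows up as coordinated deployments across teams.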

File transfer-based integration

Integrating systems through the transfer of files is another well-established technique. In this integration pattern, protocols like SFTP, FTP, FTPS and SCP are used to make discrete whole files available for consumption by multiple systems. In this way, important datasets can be shared centrally, but data integrity must be guarded carefully, since one system can easily remove or overwrite these shared files and most of the commonly used file formats lack descriptive metadata or any enforcement of data structures.

When using this integration pattern, issues can occur from deleting or overwriting a file at the wrong time, resulting in incomplete processing or handling of business logic. Another common source of trouble is a change to the file structure, which often breaks downstream parsing or processing logic. These problems are often mitigated by introducing a staging area for data quality checks.
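A staging area can be as simple as a directory where files are validated before they are moved to the shared location. The following is a minimal sketch under stated assumptions: the CSV layout in `EXPECTED_HEADER`, the directory names and the `publish` helper are hypothetical, and local directories stand in for whatever an SFTP or SCP server would expose.

```python
import csv
import os
import tempfile
from pathlib import Path

# Hypothetical layout agreed on by the producing and consuming systems.
EXPECTED_HEADER = ["customer_id", "name", "email"]


def validate_csv(path: Path) -> list:
    """Return a list of problems found; an empty list means the file passed."""
    problems = []
    with path.open(newline="") as handle:
        reader = csv.reader(handle)
        header = next(reader, None)
        if header != EXPECTED_HEADER:
            return [f"unexpected header: {header}"]
        for line_number, row in enumerate(reader, start=2):
            if len(row) != len(EXPECTED_HEADER):
                problems.append(
                    f"line {line_number}: expected {len(EXPECTED_HEADER)} "
                    f"fields, got {len(row)}"
                )
    return problems


def publish(staged: Path, shared_dir: Path) -> Path:
    """Validate a staged file, then move it into the shared directory."""
    problems = validate_csv(staged)
    if problems:
        raise ValueError("; ".join(problems))
    target = shared_dir / staged.name
    # os.replace swaps the file in a single step when both paths are on the
    # same filesystem, so consumers should not see a half-written file.
    os.replace(staged, target)
    return target


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        staging, shared = Path(root) / "staging", Path(root) / "shared"
        staging.mkdir()
        shared.mkdir()
        good = staging / "customers.csv"
        good.write_text("customer_id,name,email\n1,Ada,ada@example.com\n")
        print("published:", publish(good, shared).name)
```

A file with a changed structure is rejected in staging and never reaches the shared directory, so downstream parsers only ever see files that match the agreed layout.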

HTTP-based integration

HTTP-based techniques are used widely and operate through a communication framework or protocol layered over an HTTP connection, such as representational state transfer (REST), simple object access protocol (SOAP) or remote procedure call (RPC/gRPC). This pattern can be used equally for sharing behavior and for sharing data among the integrated systems. Data sharing occurs when a system requests individual business objects, or collections of them, by some filtering identifier. Behavior is shared by submitting a request to another system with well-defined properties used to direct the outcome, which may or may not generate data for the requestor.

These HTTP-based integration techniques are usually referred to as point-to-point integrations following the request/response pattern restricted to two systems, or web services, interacting synchronously. Such point-to-point integrations are easy to follow in simple architectures. But as the number of web services grows and capabilities evolve, these architectures risk becoming tightly coupled, deeply nested, synchronous call stacks with difficult-to-predict response times. However, gRPC takes advantage of HTTP/2, which allows for a duplexed flow of messages that may be routed and consumed asynchronously.
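The request/response pattern can be sketched with nothing but Python's standard library. Everything named here is an assumption made for illustration: the `/customers/<id>` path, the `CUSTOMERS` data and the helper functions. A tiny service runs on a local port and a second "system" requests one business object by its identifier.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import ProxyHandler, build_opener

# Hypothetical in-memory data owned by the "customer" service.
CUSTOMERS = {"42": {"id": "42", "name": "Ada"}}


class CustomerHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Expect paths of the form /customers/<id>.
        customer = CUSTOMERS.get(self.path.rsplit("/", 1)[-1])
        body = json.dumps(customer or {"error": "not found"}).encode()
        self.send_response(200 if customer else 404)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the example quiet


def start_service() -> HTTPServer:
    """Start the service on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), CustomerHandler)  # port 0: OS picks
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


# Ignore any proxy settings so the loopback call stays on this machine.
_opener = build_opener(ProxyHandler({}))


def fetch_customer(base_url: str, customer_id: str) -> dict:
    """Consuming system: blocks until the service answers or the call times out."""
    url = f"{base_url}/customers/{customer_id}"
    with _opener.open(url, timeout=5) as response:
        return json.load(response)


if __name__ == "__main__":
    server = start_service()
    base_url = f"http://127.0.0.1:{server.server_address[1]}"
    print(fetch_customer(base_url, "42"))
    server.shutdown()
    server.server_close()
```

The caller in `fetch_customer` waits for the full round trip. If the service itself called a third system in the same way, the waits would stack, which is how nested synchronous call chains end up with unpredictable response times.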

Messaging-based integration

This integration pattern offers both asynchronous and synchronous approaches where an application publishes messages to a middleware component, like a queue or message broker, for the purposes of either notification or state transfer. A message can be published for notification purposes to either initiate a process or inform other systems that something of importance occurred. Messages are used for state transfer to facilitate the movement of key datasets for consumption in partner systems so data can be shaped to best suit each app’s need.

Messaging-based integration is often referred to as publish-and-subscribe messaging and promotes loose coupling because the messaging infrastructure serves as a layer of indirection, keeping the producing application from making limiting assumptions about any of its consumers. Instead, the rules of interaction revolve around knowing the named message channel and adhering to a common data format. Messaging can be point-to-point, where a single application publishes a message to a queue consumed by another single application, or broadcast style, where the message is published to a topic or channel and consumed by many different pluggable, purpose-driven consuming applications in an evolutionary fashion.
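The difference between the two styles can be shown with a toy in-memory broker. This is only a sketch: the `Broker` class, the channel names and the message shapes are hypothetical, and delivery here happens immediately in the same process, whereas real brokers deliver messages over a network and usually asynchronously.

```python
from collections import defaultdict, deque


class Broker:
    """Toy stand-in for messaging middleware (hypothetical, in-memory only)."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic name -> handler functions
        self._queues = defaultdict(deque)      # queue name -> pending messages

    # Broadcast style: every subscriber of a topic gets every message.
    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, message):
        for handler in list(self._subscribers[topic]):
            handler(message)

    # Point-to-point style: each queued message is consumed exactly once.
    def send(self, queue, message):
        self._queues[queue].append(message)

    def receive(self, queue):
        pending = self._queues[queue]
        return pending.popleft() if pending else None


if __name__ == "__main__":
    broker = Broker()
    billing_inbox, shipping_inbox = [], []

    # Two consumers plug into the same topic; the producer knows neither.
    broker.subscribe("orders.created", billing_inbox.append)
    broker.subscribe("orders.created", shipping_inbox.append)
    broker.publish("orders.created", {"order_id": 1, "total": 25.0})
    print(billing_inbox, shipping_inbox)

    broker.send("invoices", {"invoice_id": 7})
    print(broker.receive("invoices"), broker.receive("invoices"))
```

The producer only needs the channel name and the agreed message format. Adding a third subscriber to `orders.created` later requires no change to the publishing code, which is the loose coupling described above.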

What is driving the demand for systems integration?

Systems integration has long been an important component of delivering technology without hazardous duplication of functionality and data throughout the organization. However, I’ve seen a notable proliferation of integration use cases that seem to be riding the tailwinds of explosive growth and innovation resulting from the cloud computing wave. Lower barriers to entry made possible by cloud computing have led new market challengers to introduce innovative niche SaaS offerings, which also benefit from a wide variety of cloud-based integration solutions and appealing free trials for no-risk experimentation. Technology leaders are realizing this and using integration to harness these niche SaaS capabilities significantly faster, and often more cheaply, than building them in-house, making integration a prudent way to gain competitive advantage.

How to do cloud engineering and systems integration well

When it comes to cloud engineering and systems integration, adopting a DevOps culture along with the processes commonly associated with it has been shown to increase the likelihood of successful outcomes. High on the best practices list is to use a version control system (VCS) and infrastructure as code (IaC) to represent as much of your environment as possible, including infrastructure, applications and configuration. This provides a valuable single source of truth for all aspects of the architecture, driving consistency and integrity. Once version control is in place and everything is expressed in terms of IaC, deployments can be automated with continuous integration and continuous delivery (CI/CD) technologies like Jenkins, CircleCI or GitHub Actions. It’s also important to emphasize automated testing and static code analysis tools to elevate the quality of software being delivered and provide the guardrails for future evolution.

To help establish the DevOps tooling and technologies I just mentioned, along with facilitating other best practices, many organizations have introduced enablement teams. An enablement team is usually a cross-functional team that includes people with a variety of experiences along with a common skill in the specific domain of interest, like cloud computing or systems integration. These enablement teams help influence and build out the appropriate DevOps capabilities that will best support the organization, develop reusable patterns used by implementation teams and provide training along with consultation as needed.

When establishing enablement teams, it’s important to keep expectations clear that the enablement team’s goal is not to be a centralized cloud or integration implementation team that carries out all duties in its area. Centralizing the work that way is sure to slow technology delivery, constraining it to the speed of the enablement team and the maximum number of projects it can handle at a time. Instead, the goal should be to have a small, nimble team that helps a growing number of implementation teams work effectively within a given domain, rather than having teams throw work over the proverbial wall at each other.